Drones to the Rescue without GPS, Pilots or Maps

CAMBRIDGE, MA—Drones are learning to fly solo, a boon for soldiers, first responders, package delivery companies and others who want their unmanned aerial vehicles (UAVs) to do tough, dangerous and remote work for them. At a recent demonstration, a team of engineers put this idea to the test. They outfitted a commercial-off-the-shelf UAV with a set of new algorithms and showed that it could fly with no human input once it was provided a general heading, distance to travel and specific items to search for.

https://www.youtube.com/embed/vDYy3L9nvLk

The demonstration took place during Phase 2 flight tests of DARPA’s Fast Lightweight Autonomy (FLA) program. Engineers from Draper and the Massachusetts Institute of Technology (MIT) made up a team that took part in the FLA program and the final demonstration.

Conducted in a mock town at a training facility in Perry, Georgia, aerial tests showed significant progress in urban outdoor as well as indoor autonomous flight scenarios, according to DARPA. Results included flying at increased speeds between multistory buildings and through tight alleyways while identifying objects of interest.

The Draper/MIT team made several improvements to the UAV over the Phase 1 flight tests. They reduced the number of onboard sensors to lower the cost and lighten their UAV for higher-speed and longer-duration flight. Using neural nets, the onboard computer was able to recognize objects of interest, such as cars, and pinpoint their locations. The UAV explored the environment at speeds up to 20 mph, maneuvering around buildings, over fences and under tree branches, and then returned to the operator.

The UAV test also demonstrated the value of this type of technology for military use.

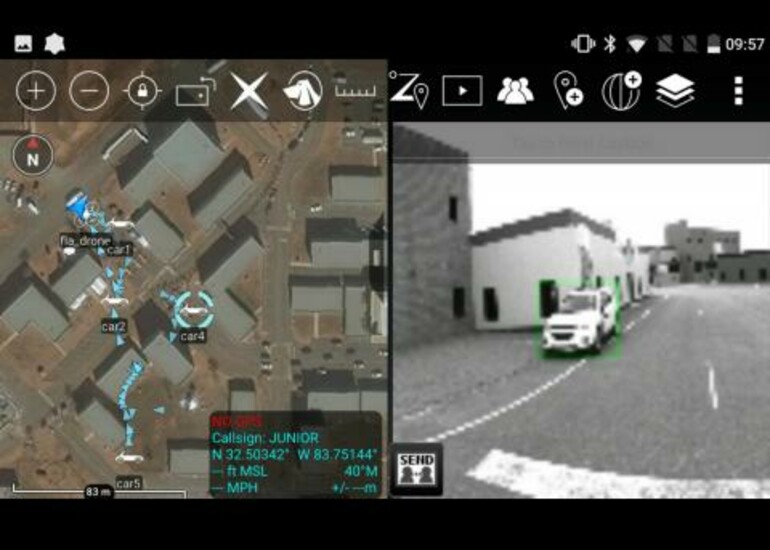

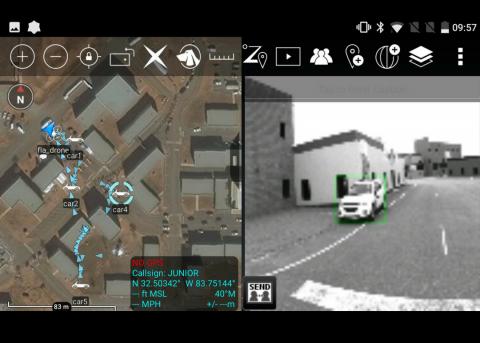

The team incorporated the ability to sync data collected by the air vehicle with a special handheld app already deployed to military forces. Using an optional Wi-Fi link from the aircraft, which a human team member can turn on or off as desired, the UAV can send real-time imagery of the detected objects of interest. In the app, the object locations can be overlaid on a satellite map with clickable images taken by the vehicle. Human team members can then livestream the map and images from the vehicle to their app-enabled handheld devices during the mission.

“Beyond military uses, there are many other applications for a ‘thinking drone’,” said Ted Steiner, senior member of Draper’s technical staff. “There are many places we want to go where it is not safe for humans, including structures that are no longer safe or are not known to be safe. After a catastrophe such as an earthquake or a tornado, first responders want to identify people in danger or needing help as quickly as possible. FLA technology will help enable the ability to quickly search these unknown environments without the need for expert drone pilots to be available on-site.”

Co-investigators on the program included Professors Nicholas Roy (MIT Computer Science & Artificial Intelligence Lab), Jonathan How (MIT Laboratory for Information and Decision Systems) and Russ Tedrake (MIT Computer Science & Artificial Intelligence Lab).

Draper’s work on the FLA program builds on its legacy in autonomous systems, algorithms and positioning, navigation and timing. In addition to working with autonomous systems, Draper has assisted U.S. government agencies with projects including cybersecurity, technology protection and miniature cryptography for high-stress environments.

Funding for the study was provided by the U.S. Defense Advanced Research Projects Agency’s Fast Lightweight Autonomy Program.

Released August 14, 2018